Let’s build an Ansible playbook to create the configuration snapshots used for testing.

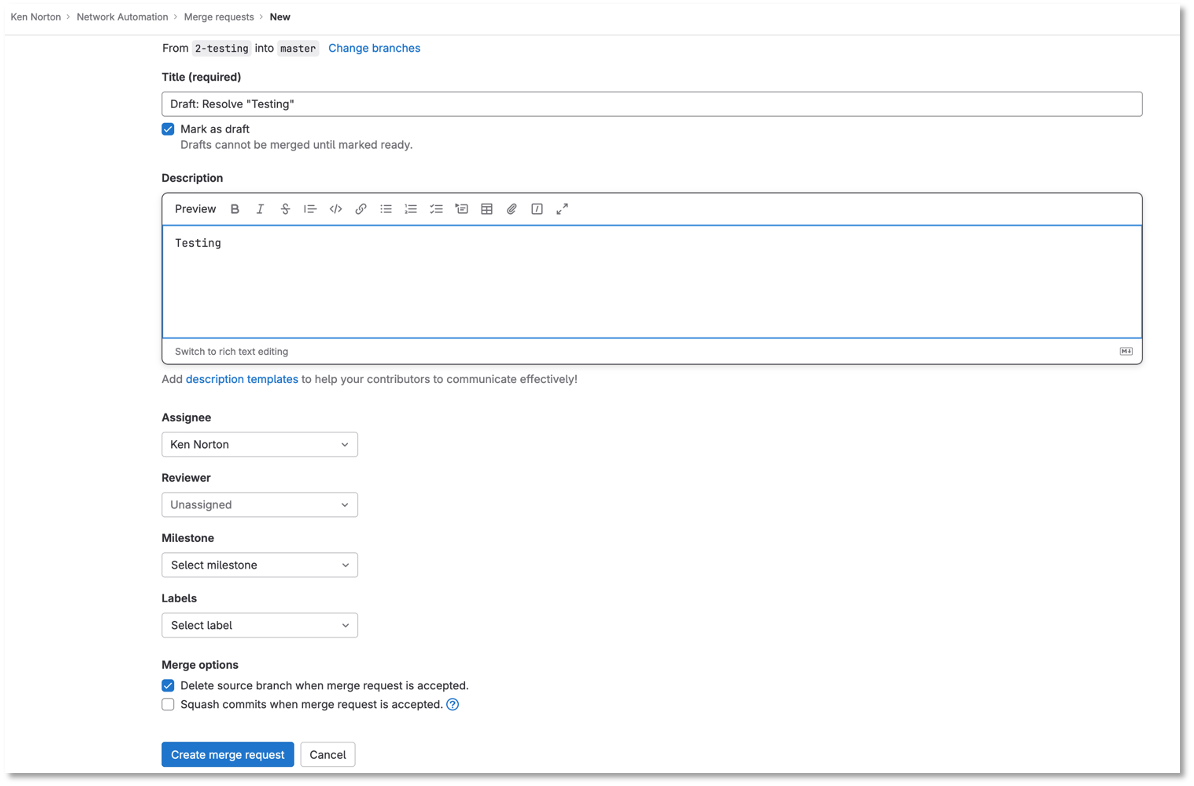

Let’s use Visual Studio Code to review the tests folder.

Let’s review the files in the tests directory.

Figure 3: Tests File Structure

Let’s review the snapshots.yaml file:

This playbook will create a backup of the switch configurations for Batfish to use as a model.

    ---
    - name: CAPTURE DATE AND CREATE DIRECTORY
      hosts: localhost
      tasks:
        - name: Capture Date
          command: date +"%Y-%m-%d"
          register: time
          changed_when: false
          delegate_to: localhost

        - name: Create Directory
          file:
            path: /home/gitlab-runner/network-automation/configs  # (1)
            state: directory
          run_once: yes

    - name: BACKUP ARISTA SWITCHES
      hosts: eos
      gather_facts: false
      connection: network_cli
      tasks:
        - name: ARISTA SWITCH CONFIG
          eos_command:
            commands: show run
          register: output

        - name: COPY SWITCH CONFIGS
          copy:
            content: "{{ output.stdout[0] }}"
            dest: "/home/gitlab-runner/network-automation/configs/show_run_{{ inventory_hostname }}.txt"  # (1)

(1) This is the location where the configurations will be stored for Batfish to model.
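Batfish expects a snapshot directory to contain a configs subdirectory holding the device configuration files, which is why the playbook writes into the configs folder under network-automation. The following is a minimal sketch, not part of the original repository, of a pre-flight check that could run before the Batfish script. The function name `list_snapshot_configs` and the throwaway demo directory are assumptions made for illustration.

```python
import tempfile
from pathlib import Path


def list_snapshot_configs(snapshot_path):
    """Return sorted config file names found under <snapshot_path>/configs.

    Raises FileNotFoundError when the configs directory is missing or empty,
    so a CI job fails early instead of handing Batfish an empty snapshot.
    """
    configs_dir = Path(snapshot_path) / "configs"
    if not configs_dir.is_dir():
        raise FileNotFoundError(f"missing directory: {configs_dir}")
    names = sorted(p.name for p in configs_dir.iterdir() if p.is_file())
    if not names:
        raise FileNotFoundError(f"no configuration files in {configs_dir}")
    return names


if __name__ == "__main__":
    # Demo against a temporary directory that mimics the playbook's output.
    with tempfile.TemporaryDirectory() as tmp:
        configs = Path(tmp) / "configs"
        configs.mkdir()
        (configs / "show_run_leaf3.txt").write_text("hostname leaf3\n")
        print(list_snapshot_configs(tmp))
```

In the pipeline, the same function could be pointed at /home/gitlab-runner/network-automation/ after the playbook finishes.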

Let’s review the batfish.py file:

This is a Python script that uses the pybatfish module.

Batfish uses a series of questions to test the network.

The answers to the questions are based on the model that Batfish builds from the configurations.

One of the benefits of Batfish is that it does not need the switches to be up and running to test functionality.

# Modules

from pybatfish.client.commands import bf_init_snapshot, bf_session

from pybatfish.question.question import load_questions

from pybatfish.question import bfq

import os

# Variables

bf_address = "127.0.0.1"

snapshot_path = "/home/gitlab-runner/network-automation/"

output_dir = "/home/gitlab-runner/network-automation/"

# Body

if __name__ == "__main__":

# Setting host to connect

bf_session.host = bf_address

# Loading confgs and questions

bf_init_snapshot(snapshot_path, overwrite=True) (1)

load_questions()

# Running questions

r = bfq.nodeProperties().answer().frame() (2)

assert r.empty is False (3)

print(r)

r1 = bfq.nodeProperties(properties="SNMP_Trap_servers").answer().frame() (2)

assert r1.empty is False (3)

print(r1)

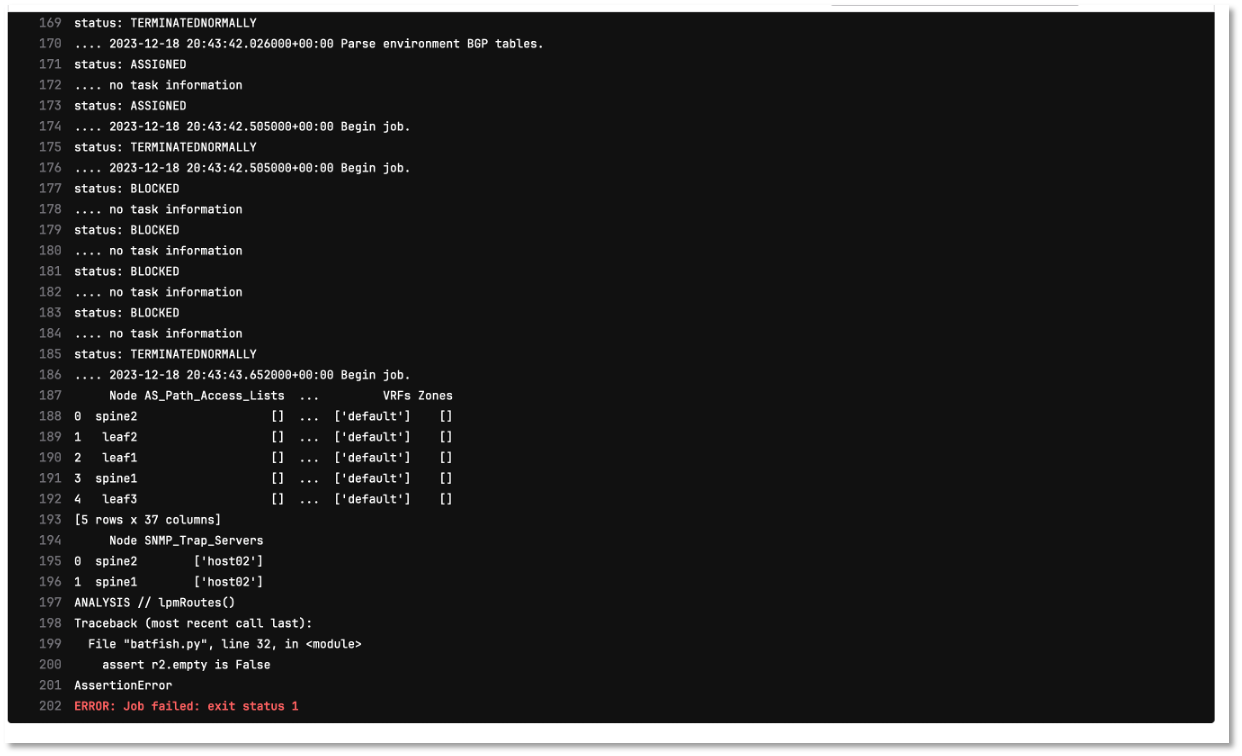

print("ANALYSIS // lpmRoutes()")

r2 = bfq.lpmRoutes(ip='192.168.14.1').answer().frame() (2)

assert r2.empty is False (3)

print(r2)

# Saving output

if not os.path.exists(output_dir):

os.mkdir(output_dir)

r.to_csv(f"{output_dir}/results.csv")(4)

(1) Here is where we load the configurations.

(2) Here are the questions for Batfish.

(3) We use the Python assert statement to check that the answer is not empty.

(4) This saves the full output of the first question (r) to results.csv.
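A bare assert stops the script on the first empty answer but does not say which question failed. As a minimal sketch (not the author's code), a small helper can attach a label to the failure. `require_rows` is a hypothetical name, and `FakeFrame` is only a stand-in so the example runs without a Batfish server; in the real script the argument would be the pandas DataFrame returned by `.answer().frame()`, which exposes the same `empty` attribute.

```python
class FakeFrame:
    """Stand-in for a pandas DataFrame; only the `empty` attribute is modeled."""

    def __init__(self, rows):
        self.rows = list(rows)

    @property
    def empty(self):
        return len(self.rows) == 0


def require_rows(frame, label):
    """Raise AssertionError naming the question when its answer has no rows."""
    if frame.empty:
        raise AssertionError(f"Batfish question returned no rows: {label}")
    return frame


if __name__ == "__main__":
    # A non-empty answer passes through unchanged.
    require_rows(FakeFrame([{"Node": "leaf3"}]), "nodeProperties")
    # An empty answer fails with a message that names the question.
    try:
        require_rows(FakeFrame([]), "lpmRoutes(ip='192.168.14.1')")
    except AssertionError as err:
        print(err)
```

Raising the error explicitly, rather than relying on the assert statement, also keeps the check active when Python runs with the -O flag, which strips assert statements.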

In the previous section, we automated a change to add VLAN 14 and subnet 192.168.14.0/24 to the leaf3 switch.

As part of the Containerlab deployment, we created three Linux container clients.

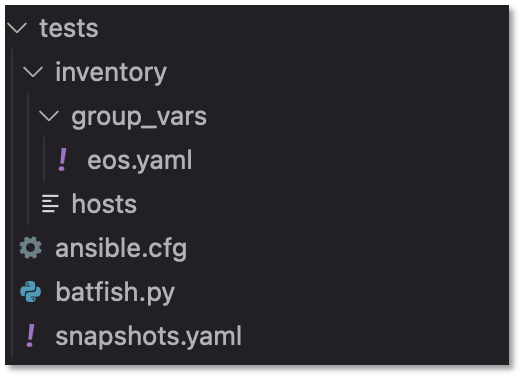

Let’s add a new test stage to the CI/CD file, .gitlab-ci.yml:

    stages:
      - build
      - stage
      - change
      - test  # (1)
      - backup

(1) Add the test stage to the .gitlab-ci.yml file.

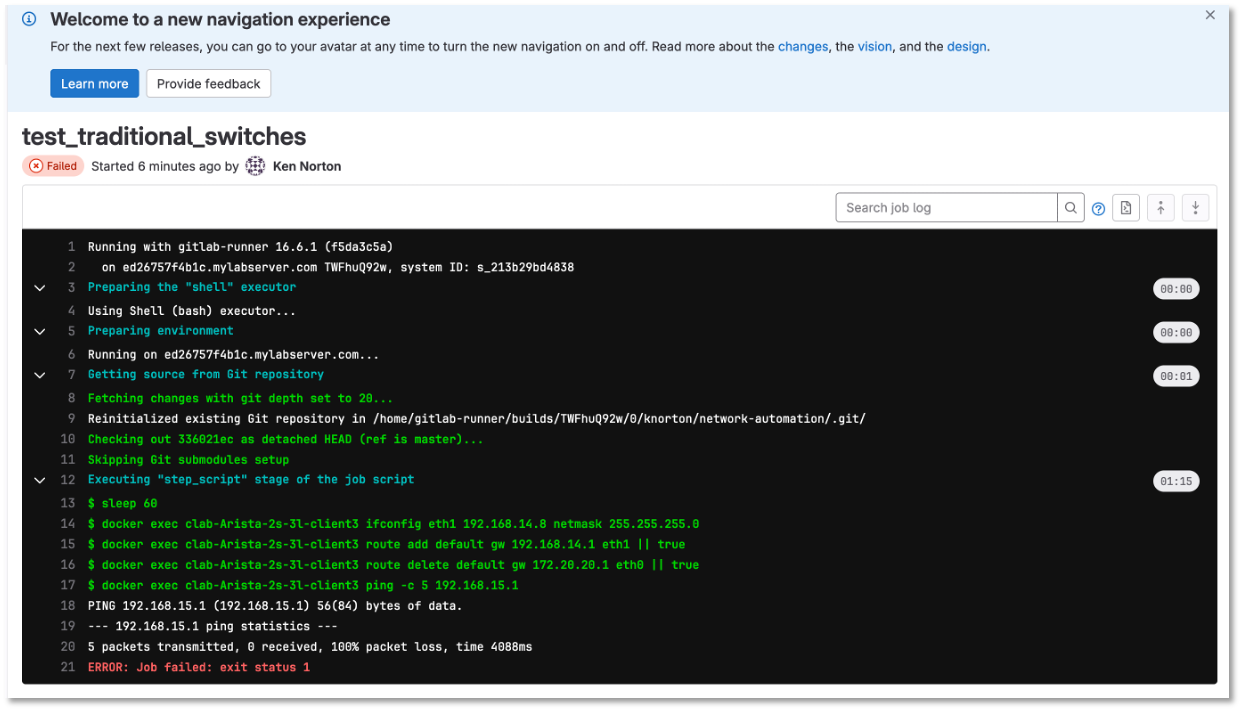

Since the Linux clients are Docker containers, we can use the docker exec command to update the networking stack on the third Linux client.

Let’s add an IP address of 192.168.14.8 to eth1 of the Linux client.

Let’s add a default route that points to the new VLAN interface at 192.168.14.1.

Delete the existing default route.

Add a ping and a traceroute to test the change.

Add this job between network_change and backup_switches:

    test_traditional_switches:
      stage: test
      before_script:
        - sleep 60
      script:
        - docker exec clab-Arista-2s-3l-client3 ifconfig eth1 192.168.14.8 netmask 255.255.255.0
        - docker exec clab-Arista-2s-3l-client3 route add default gw 192.168.14.1 eth1 || true
        - docker exec clab-Arista-2s-3l-client3 route delete default gw 172.20.20.1 eth0 || true
        - docker exec clab-Arista-2s-3l-client3 ping -c 5 192.168.14.1
        - docker exec clab-Arista-2s-3l-client3 traceroute 192.168.11.1
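The addressing used in this job can be sanity-checked offline. The sketch below uses Python's standard ipaddress module to show that the gateway 192.168.14.1 is on the same subnet as the client address, while the traceroute target 192.168.11.1 is not and therefore has to go through the new default route. The helper name `same_subnet` is an assumption made for illustration.

```python
import ipaddress


def same_subnet(host_ip, netmask, other_ip):
    """Return True when other_ip falls inside the network of host_ip/netmask."""
    # strict=False lets us pass a host address instead of the network address.
    network = ipaddress.ip_network(f"{host_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(other_ip) in network


if __name__ == "__main__":
    # Gateway on the new VLAN 14 interface: reachable directly from eth1.
    print(same_subnet("192.168.14.8", "255.255.255.0", "192.168.14.1"))
    # Traceroute target: outside the client subnet, so it must be routed.
    print(same_subnet("192.168.14.8", "255.255.255.0", "192.168.11.1"))
```

This is why the job pairs a ping to the gateway with a traceroute to an address on another subnet: the first checks the new VLAN interface, the second checks routing beyond it.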

Now let’s reference the batfish.py file in a new job in the .gitlab-ci.yml file.

Add this job after the test_traditional_switches job and before the backup_switches job:

    test_model_switches:
      stage: test
      before_script:
        - cd tests
        - docker rmi -f $(docker images -q --filter=reference="batfish/allinone:latest") || true
      script:
        - docker run -d --restart=always --name batfish -v batfish-data:/data -p 8888:8888 -p 9997:9997 -p 9996:9996 batfish/allinone || true
        - ansible-playbook snapshots.yaml -v
        - python3 -m venv venv
        - source venv/bin/activate
        - pip install pybatfish
        - python3 batfish.py

Now let’s push the changes to the remote repository.

Remember to save the files first!

Run the following commands to push the code and kick off the pipeline:

    cd ~/network-automation/
    git add .gitlab-ci.yml
    git commit -m "added test changes"
    git push origin 2-testing

Here is the progression of the commands above

    kennorton@C02G71AFMD6P-knorton:~/network-automation$ git add .gitlab-ci.yml  # (1)
    kennorton@C02G71AFMD6P-knorton:~/network-automation$ cd tests/
    kennorton@C02G71AFMD6P-knorton:~/network-automation/tests$ git branch  # (2)
      1-network-change
    * 2-testing
      master
    kennorton@C02G71AFMD6P-knorton:~/network-automation/tests$ git commit -m "added test changes"  # (3)
    [2-testing 19301d9] added test changes
     1 file changed, 9 insertions(+), 5 deletions(-)
    kennorton@C02G71AFMD6P-knorton:~/network-automation/tests$ git push origin 2-testing  # (4)
    Username for 'http://ed26757f4b2c.mylabserver.com': knorton
    Password for 'http://knorton@ed26757f4b2c.mylabserver.com':
    Enumerating objects: 7, done.
    Counting objects: 100% (7/7), done.
    Delta compression using up to 2 threads
    Compressing objects: 100% (4/4), done.
    Writing objects: 100% (4/4), 431 bytes | 431.00 KiB/s, done.
    Total 4 (delta 3), reused 0 (delta 0)
    remote:
    remote: View merge request for 2-testing:
    remote:   http://ed26757f4b2c.mylabserver.com/knorton/network-automation/-/merge_requests/6
    remote:
    To http://ed26757f4b2c.mylabserver.com/knorton/network-automation.git
       60f97a8..19301d9  2-testing -> 2-testing

(1) Adding the modified .gitlab-ci.yml file to the local repository.

(2) Checking to make sure we are working on the correct git branch.

(3) Committing the latest changes to the local repository.

(4) Pushing the changes to the 2-testing branch of the remote repository on the GitLab CE server.

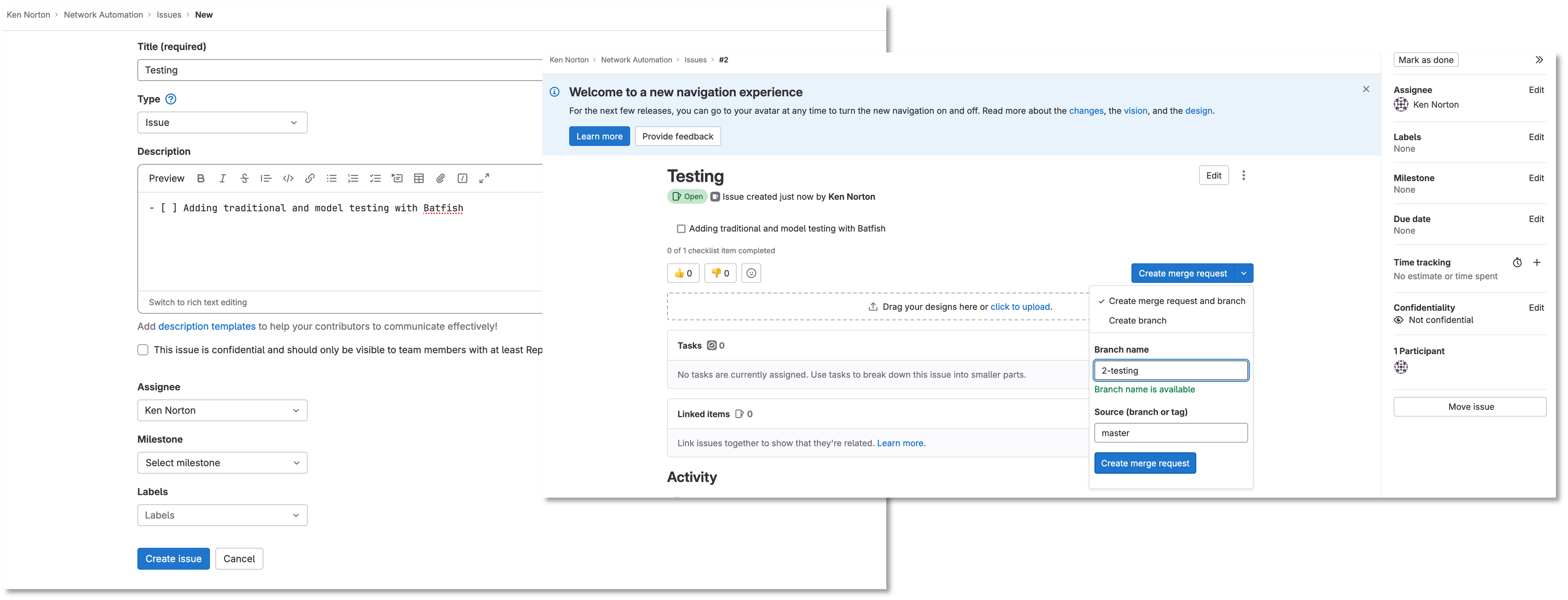

Log in to the GitLab CE server as a user.

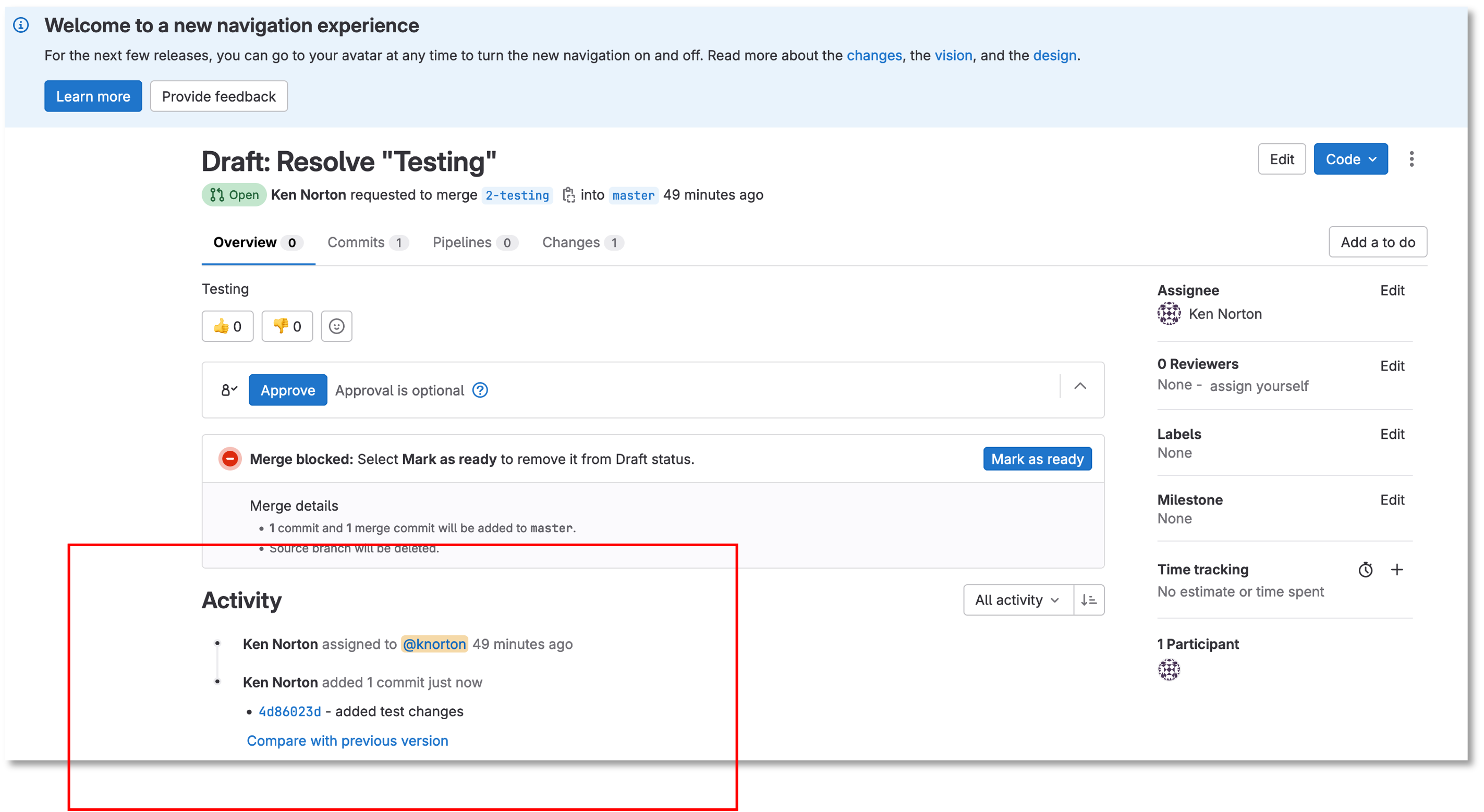

Notice the Merge Request update. Click Merge Requests and select the recent merge request.

Under Activity, you can review the changes.

Click the 4d86023d link to review the changes (the link ID will be unique).

Click Resolve conflicts if needed.

Click Mark as ready.

Working with YAML files can be challenging. In case you are getting an error with your .gitlab-ci.yml file, here is a copy of the complete file at this point:

    ---
    workflow:
      rules:
        - if: $CI_COMMIT_TAG
          when: never
        - if: $CI_COMMIT_BRANCH == 'master'

    stages:
      - build
      - stage
      - change
      - test
      - backup

    build_switches:
      stage: build
      before_script:
        - cd infra
      script:
        - sudo containerlab destroy -t ceos_2spine_3leaf.yaml || true
        - sudo -E CLAB_LABDIR_BASE=/var/clab containerlab deploy -t ceos_2spine_3leaf.yaml || true

    staging_switches:
      stage: stage
      before_script:
        - cd build
      script:
        - sleep 60
        - pip install ansible-pylibssh
        - ansible-galaxy collection install arista.eos
        - ansible-playbook build.yaml -v

    network_change:
      stage: change
      before_script:
        - cd change
      script:
        - ansible-playbook change.yaml -v
      dependencies:
        - staging_switches

    test_traditional_switches:
      stage: test
      before_script:
        - sleep 60
      script:
        - docker exec clab-Arista-2s-3l-client3 ifconfig eth1 192.168.14.8 netmask 255.255.255.0
        - docker exec clab-Arista-2s-3l-client3 route add default gw 192.168.14.1 eth1 || true
        - docker exec clab-Arista-2s-3l-client3 route delete default gw 172.20.20.1 eth0 || true
        - docker exec clab-Arista-2s-3l-client3 ping -c 5 192.168.14.1
        - docker exec clab-Arista-2s-3l-client3 traceroute 192.168.11.1

    test_model_switches:
      stage: test
      before_script:
        - cd tests
        - docker rmi -f $(docker images -q --filter=reference="batfish/allinone:latest") || true
      script:
        - docker run -d --restart=always --name batfish -v batfish-data:/data -p 8888:8888 -p 9997:9997 -p 9996:9996 batfish/allinone || true
        - ansible-playbook snapshots.yaml -v
        - python3 -m venv venv
        - source venv/bin/activate
        - pip install pybatfish
        - python3 batfish.py

    backup_switches:
      stage: backup
      before_script:
        - cd backup
      script:
        - ansible-playbook playbooks/git_backup.yaml -v
      dependencies:
        - staging_switches
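One common source of YAML errors is a tab character used for indentation, which YAML does not allow. The following is a minimal sketch, using only the standard library, that reports which lines of a file's text are indented with a tab. The function name `find_tab_indented_lines` is an assumption made for illustration, and the check covers only this one mistake; it is not a full YAML or GitLab CI validator.

```python
def find_tab_indented_lines(text):
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    bad = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Isolate the leading run of spaces and tabs on this line.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad.append(number)
    return bad


if __name__ == "__main__":
    # The third line is indented with a tab instead of spaces.
    sample = "stages:\n  - build\n\t- test\n"
    print(find_tab_indented_lines(sample))
```

To check the real file, read its contents with `open(".gitlab-ci.yml").read()` and pass the text to the function.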

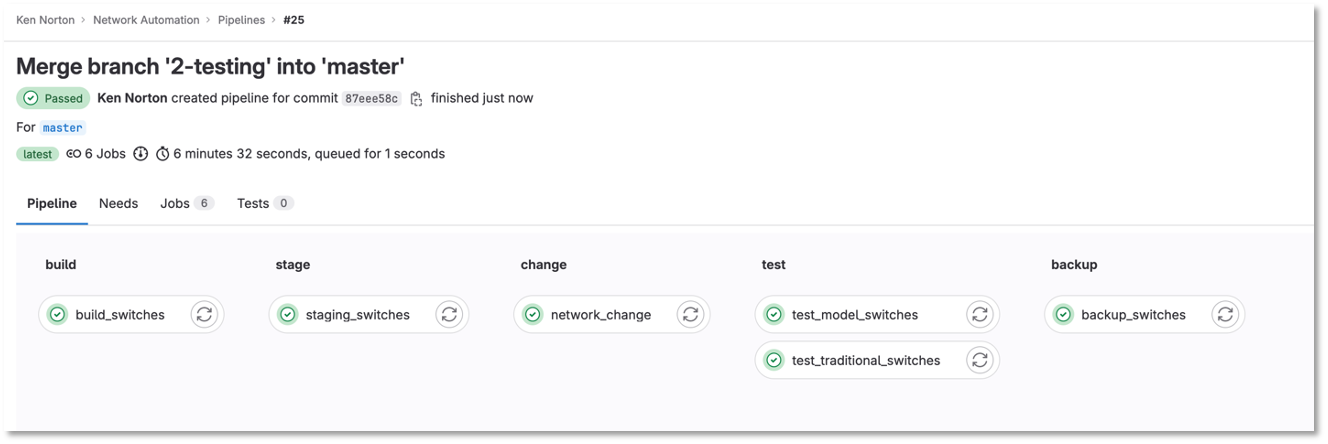

Under the build section

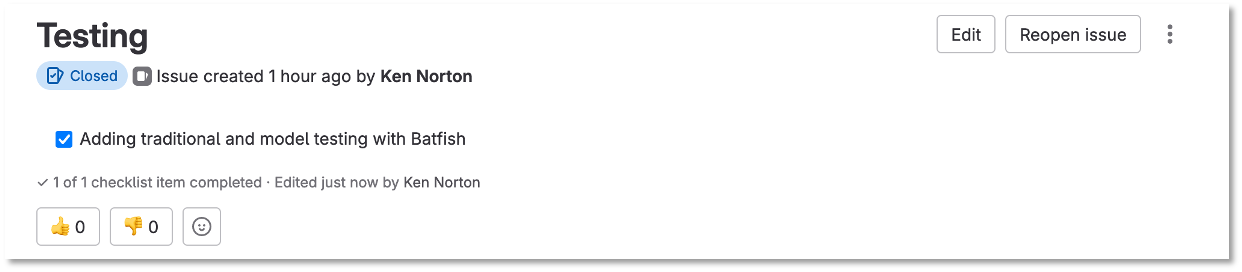

When the pipeline completes, the issue will close automatically.

Go back into the issue and check the checkboxes if the pipeline was successful.

Figure 6: Close The Issue